TeleScript Software

Optimizing Performance for Windows 11

In earlier days, computer performance could largely be predicted by examining hardware specifications, and even from manufacturers’ model numbers. Today, virtually all of a particular brand’s offerings are highly configurable, offering a choice of processors, display cards, memory capacity, and internal drive specs. In addition, computers today are “driver centric”: the performance of hardware might depend more on driver design than on the actual physical device specs. For this reason, it’s difficult to make a reliable suggestion when customers ask for a recommendation of a computer model that will work well with TeleScript Software.

The only real test is to buy and try. Since there are hundreds if not thousands of potential candidates, this is obviously not possible. Nevertheless, there are some general properties that make a specific system more likely to provide good performance. Over the years at Telescript West, Inc., we have configured several hundred systems for customers, using a variety of brands and operating systems ranging from MS-DOS through Microsoft Windows versions starting at Windows 95 and continuing with Windows Vista, Windows 7, Windows 8/8.1, Windows 10, and, most recently, Windows 11. Each computer and each OS version has presented some unique considerations. In this document, I’ve summarized my findings for best performance on Windows 11.

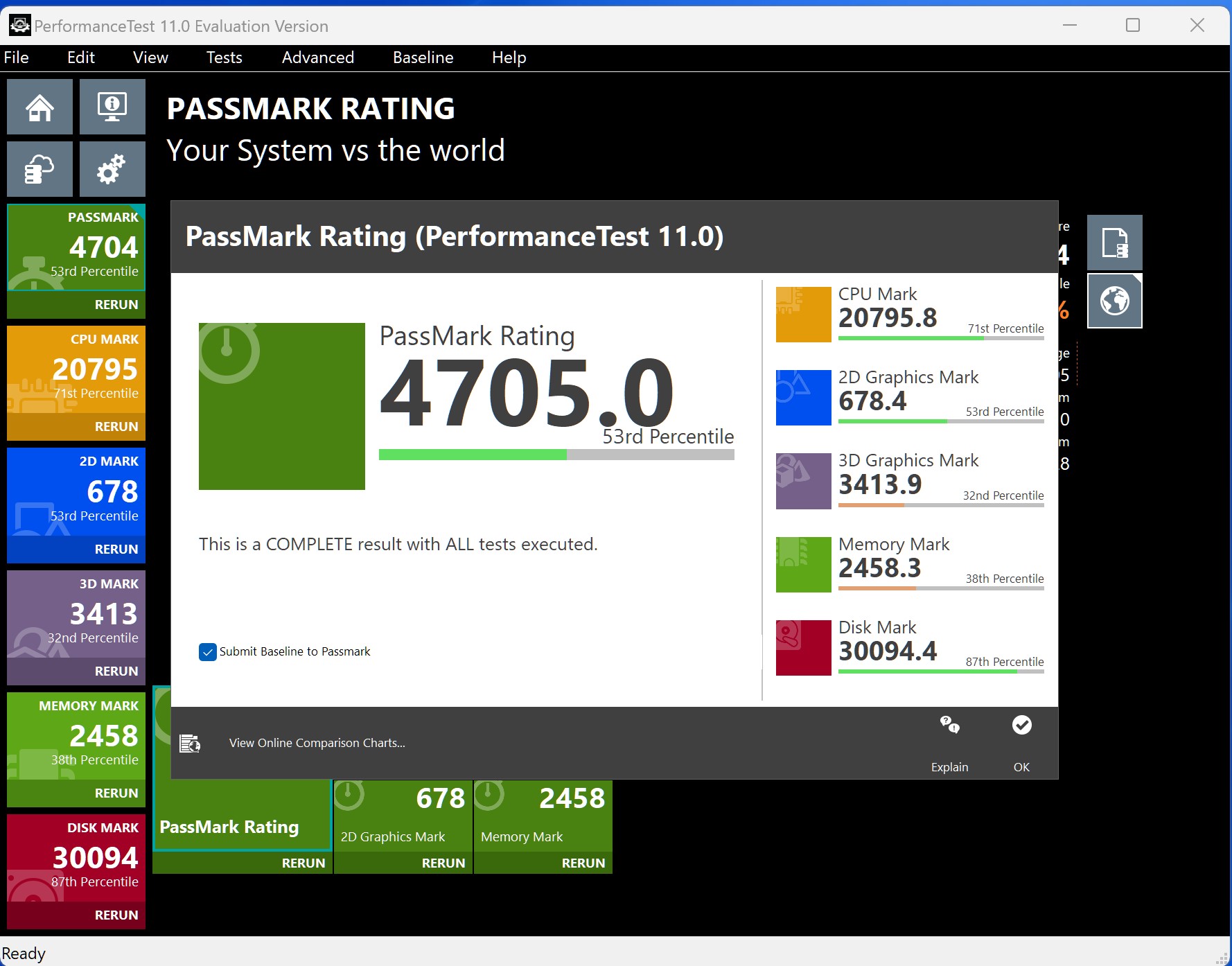

Most experimental data is derived from the Performance Test Software available from PassMark.com. Though there are several available benchmarking applications, PassMark is the one that I've found best for TeleScript Software, because the others seem to overlook 2D Graphics performance, which is essential for smooth scrolling in TeleScript ProNEWS and TeleScript AV (TeleScript TECH is more dependent on 3D Graphics and is very forgiving of hardware configuration). In addition, a large, searchable database is available on PassMark.com, comparing performance data from various system components. I highly recommend this web site and its inexpensive, comprehensive software for assessing computer performance.

I. Selecting a Computer

Higher price is not always better. There is not always a correspondence between price and performance, at least not specifically with TeleScript Software. Some high-end, so-called “gaming computers” perform no better than some lower-end computers.

What is likely to be important? In the driver-centric world explained above, and given differences in manufacturers’ board designs, the same components may perform differently in different systems. In general, though, here are some hardware specs that might be significant:

1. More RAM is better. Why? Because more memory will mean fewer system requests for virtual memory. A virtual memory request from the OS will involve a read and/or write to the system drive. Drive access is always a high priority OS request and may interrupt a thread important to smooth scrolling. On today’s complex multi-core CPUs, the frequency of virtual memory requests and their impact on program performance are not as predictable as in earlier single-core systems, but in my experiments more RAM has always performed better with TeleScript Software.

2. A faster hard drive is important, for much the same reason that more RAM helps system performance: it’s largely about virtual memory. A fast SSD has always performed better in my experiments. Be certain not to run short of drive space, which will severely impact your computer’s performance.

3. 2D Graphics performance is more impactful than 3D Graphics performance. The algorithms used to display prompting text, and to smoothly update the scrolling display, are more dependent on 2D performance. I have used software provided by PassMark to find display hardware that reportedly has good 2D performance. Some systems, such as some gaming computers, that have excellent 3D performance, important for games and graphics, have poorer 2D specs. 2D graphics functions are what is typically used for text display, and they are at the heart of TeleScript Software performance.

4. CPU speed is helpful, but not as critical. In earlier computers, the CPU was often involved in display buffering, but I don’t see any evidence that modern display card design uses this older technology.

5. High resolution displays are NOT recommended. A display with a resolution of 1920x1080 is more than adequate for a good teleprompter display on all common monitors used for this application. The display card must fill EVERY pixel of an LCD display when the text scrolls. Some drivers may have algorithms to do minimal updates, but in a prompting script, virtually every displayed pixel will change as text scrolls.

It’s important to note that neither scaling nor changing resolution, whether via the display card’s control app or Windows settings, will actually change the monitor’s physical resolution. In fact, setting a lower resolution (such as setting the “resolution” of a high DPI LCD monitor to a lower perceived resolution) may actually hurt scrolling performance. In order to make screen content look larger, the display driver, or possibly the OS, uses dithering algorithms to display screen images. This adds an extra layer of processing for each pixel displayed, and every pixel STILL has to be displayed. The LCD pixel mask can’t actually change.

The bottom line is that more pixels require more time to display. For example, a 2560x1600 laptop monitor has more than 4 million pixels to write. A 1920x1080 LCD display has only about 2 million pixels, roughly half as many. All else being equal, this means the computer will take about twice the time to write the higher resolution display. Display resolution is a major contributor to scrolling smoothness.

If you are using an external display, which is the normal teleprompter configuration, the same criterion applies regarding display resolution. Also, although what goes on inside any display card is a black box and the methods are not public, duplicating the display (what used to be called “cloning”) is presumably achieved by filling one display buffer and “blitting” to the second display buffer. However the second display is achieved, it’s not just a ‘Y’ cable: there are two separate display buffers that must be filled, and the size of the buffers depends upon each display’s physical resolution. More pixels means more time.

6. Update drivers on any system. On any computer system, it’s vitally important to update drivers for all components, but particularly the video/display driver. On some computers, the chip manufacturer’s driver may be installed directly. Others, notably Dell computers, require the computer manufacturer’s customized drivers. Unfortunately, in some cases, customized drivers may lag the chip manufacturer’s drivers. I have encountered many cases in which a driver update fixes a performance issue or a screen artifact. To repeat, today’s systems are driver-centric: identical hardware may perform better or worse depending upon the system drivers.
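The pixel arithmetic behind item 5 can be sketched in a few lines of Python. This is only an illustration: it assumes that the time to redraw the display scales linearly with the number of physical pixels ("all else being equal"), and the function names are invented for this example.

```python
def pixel_count(width, height):
    """Number of physical pixels the display card must fill."""
    return width * height


def relative_write_time(panel, baseline=(1920, 1080)):
    """Ratio of pixels in `panel` to pixels in `baseline`.

    Assumes redraw time is proportional to pixel count, which is a
    simplification; real drivers and hardware will vary.
    """
    return pixel_count(*panel) / pixel_count(*baseline)


laptop = (2560, 1600)    # high DPI laptop panel from the example in item 5
full_hd = (1920, 1080)   # resolution recommended for prompting

print(pixel_count(*laptop))                    # 4096000
print(pixel_count(*full_hd))                   # 2073600
print(round(relative_write_time(laptop), 2))   # 1.98
```

Under that assumption, the 2560x1600 panel costs a little under twice the drawing work of a 1920x1080 panel, and a duplicated external display adds its own pixel count on top.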

II. System Tweaks

Earlier iterations of Windows and associated drivers often provided some simple adjustments that were almost certain to provide acceptable performance, but there are few, if any, exposed display configuration items in Windows 10/11. As an example, on Windows 7, it was possible to set the color depth to 16 bits, which cut the time required to draw the display by a factor of 2. Below are some system components that may be configured to improve performance.
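As an aside on the color depth example, the factor of 2 follows directly from the size of one full frame. The sketch below is plain arithmetic; `frame_bytes` is a name invented here, and it assumes an uncompressed frame buffer with no row padding, which may not match what a given display driver does internally.

```python
def frame_bytes(width, height, bits_per_pixel):
    """Bytes in one uncompressed frame (assumes no row padding)."""
    return width * height * bits_per_pixel // 8


w, h = 1920, 1080
bytes_32 = frame_bytes(w, h, 32)   # 32 bits/pixel, as on the systems listed later
bytes_16 = frame_bytes(w, h, 16)   # the older 16-bit setting

print(bytes_32, bytes_16, bytes_32 / bytes_16)   # 8294400 4147200 2.0
```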

1. BitLocker drastically affects 2D graphics performance. BitLocker is an effective tool for preventing unauthorized data access and has been around since Windows Vista. However, on some Windows 11 systems, it is enabled by default, whereas on previous versions, it was enabled by the user. In performance tests with BitLocker enabled vs. disabled, I discovered that 2D graphics performance was reduced by almost 50%. This is shown in the following images from PassMark software.

BitLocker Disabled

BitLocker Enabled

The impact on system performance is apparent:

CPU Mark reduced by 13%

3D Graphics Mark reduced by 20%

Memory Mark reduced by 10%

Disk Mark impact less than 1%

Overall system impact over 17%

HOWEVER, the all-important 2D graphics mark is reduced by 46%.

The 2D graphics performance reduction is almost equivalent to that of doubling the number of pixels on the screen. As mentioned, scrolling smoothness is highly dependent on 2D graphics performance.

Obviously, discretion must be used to determine if the benefits of BitLocker outweigh its inevitable impact on TeleScript Software performance. My recommendation is to enable BitLocker for removable drives but to disable it for the primary hard drive. For best security, disable internet access on critical prompting computers. TeleScript Software does NOT require internet access. Private networks can be used to provide script edits. All imported data should be carefully checked for malware, which can be carried by many common data files. Also, as has been proven repeatedly in recent times, there is no existing technology that can provide 100% protection against hackers other than air-gapping critical systems. Clearly, prompting the President is a critical application. So, disable BitLocker and air-gap the prompting system.

2. Memory Integrity and Core Isolation may degrade performance. I do not have objective evidence that Memory Integrity (MI) degrades TeleScript Software performance; however, my subjective assessment is that it does have a negative impact, though not nearly to the degree that is measured from BitLocker or high DPI displays. Disabling MI should be considered if performance is an issue.

3. All nonessential startup programs should be disabled. I do not, however, suggest disabling malware protection. On the other hand, I HIGHLY recommend using PassMark software to measure the impact of Anti-Virus and other malware protection. Personally, I think Microsoft’s malware protection is sufficient if it is conscientiously updated for the most recent threats. Try to eliminate all applications running in the background. Even innocuous-seeming system apps such as Indexing may have significant impact.

4. Keep the OS updated, but be aware of potential issues. Despite press reports of bugs in updates, it’s my opinion that these reports can generally be attributed to competition gone wrong. Even the highly publicized CrowdStrike issue, which many sources tried to blame on a Windows issue, was, in truth, due to the publisher’s inadequate testing. This is not to say that there are no problematic updates, or that patched systems should not be thoroughly tested before being put into use in critical applications. However, in general, updates are absolutely essential for best performance. Tests should include checks that previously set system configurations (such as BitLocker status) have not been affected by the update. As previously mentioned, drivers should be updated as frequently as needed.

5. All systems have settings that can only be performed in the system BIOS. Just like the system drivers, the system BIOS should be kept up to date. How this is done depends upon the individual system. Some systems have detailed BIOS settings that will probably require some research to understand – manufacturers are known to use terms specific to their own architecture as if they were common generic properties. Be certain to research any BIOS setting before you make a change, and save the existing configuration for restoration if needed.
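Since items 1 and 4 both call for confirming BitLocker status, including after updates, here is a minimal Python sketch of one way to check it. It relies on the Windows `manage-bde -status` command, which generally needs an elevated prompt. The field names and the abridged `SAMPLE` text are assumptions based on typical English-language output and may differ by Windows version or locale. The helper names are hypothetical.

```python
import subprocess


def parse_manage_bde(text):
    """Collect 'Key: value' lines from manage-bde status output."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and value.strip():
            fields[key.strip()] = value.strip()
    return fields


def bitlocker_is_off(text):
    """True if the volume reports no protection and no encryption."""
    fields = parse_manage_bde(text)
    return (fields.get("Protection Status") == "Protection Off"
            and fields.get("Conversion Status") == "Fully Decrypted")


def read_status(drive="C:"):
    """Run manage-bde on Windows (not called in this sketch)."""
    result = subprocess.run(["manage-bde", "-status", drive],
                            capture_output=True, text=True, check=True)
    return result.stdout


# Abridged, assumed example of the command's output for illustration.
SAMPLE = """\
Volume C: [OS]
    Conversion Status:    Fully Decrypted
    Percentage Encrypted: 0.0%
    Protection Status:    Protection Off
"""

print(bitlocker_is_off(SAMPLE))   # True
```

On an actual prompting computer, `bitlocker_is_off(read_status("C:"))` could be run after each Windows update as part of the post-update checks.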

These suggestions are largely based upon measurements using PassMark software and the experience of setting up a relatively small number of computer systems, two of which are detailed below. This list is not comprehensive (there may be other effective tweaks or configurable items), nor are the suggested items guaranteed to provide consistent results, but all the computers I have set up using these guidelines have had performance as good as or better than I experienced with Windows 10 and earlier OS iterations.
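One way to compare the BitLocker measurement in item 1 with the effect of screen resolution is to convert both into a relative time per frame. The sketch below assumes that the 2D Graphics Mark is proportional to drawing throughput and that drawing time is proportional to pixel count. Both are simplifications made here for illustration, not claims from PassMark, and the function names are illustrative.

```python
def slowdown_from_score_drop(drop_fraction):
    """Time multiplier implied by a fractional drop in throughput."""
    return 1.0 / (1.0 - drop_fraction)


def slowdown_from_pixels(new, old):
    """Time multiplier implied by a change in pixel count."""
    return (new[0] * new[1]) / (old[0] * old[1])


bitlocker = slowdown_from_score_drop(0.46)   # 46% lower 2D graphics mark
resolution = slowdown_from_pixels((2560, 1600), (1920, 1080))

print(round(bitlocker, 2), round(resolution, 2))   # 1.85 1.98
```

Under these assumptions, the measured BitLocker penalty is in the same range as moving from a 1920x1080 display to a 2560x1600 display.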

SYSTEM 1 – Dell Latitude 9450 2-in-1 (new computer setup)

OS Name Microsoft Windows 11 Pro

Version 10.0.22631 Build 22631

System Dell

Model Latitude 9450 2-in-1

Processor Intel(R) Core(TM) Ultra 7 165U, 2100 Mhz, 12 Core(s), 14 Logical Processor(s)

BIOS Version/Date Dell Inc. 1.4.1, 6/11/2024

BIOS Mode UEFI

Installed Physical Memory (RAM) 16.0 GB

Total Virtual Memory 16.1 GB

Available Virtual Memory 7.86 GB

[Display]

Adapter Type Intel(R) Graphics Family, Intel Corporation compatible

Adapter RAM 128.00 MB (134,217,728 bytes)

Driver Version 31.0.101.5382

Resolution 2560 x 1600 x 60 hertz

Bits/Pixel 32

Notes: BitLocker disabled, delivered with Windows 11/Pro, scrolling very good, occasional brief hitch indicative of a virtual memory access at about 10-15 second interval, external display at 1920x1080 no artifacts.

SYSTEM 2 – Dell Latitude 5420 (refurbished system, upgraded from Win10)

OS Name Microsoft Windows 11 Pro

Version 10.0.22631 Build 22631

System Model Latitude 5420

Processor 11th Gen Intel(R) Core(TM) i5-1145G7 @ 2.60GHz, 1498 Mhz, 4 Core(s), 8 Logical Processor(s)

BIOS Version/Date Dell Inc. 1.36.2, 4/1/2024

BIOS Mode UEFI

Installed Physical Memory (RAM) 8.00 GB

[Display]

Adapter Type Intel(R) Iris(R) Xe Graphics Family, Intel Corporation compatible

Adapter RAM 128.00 MB (134,217,728 bytes)

Driver Version 31.0.101.5333

Resolution 1920 x 1080 x 60 hertz

Bits/Pixel 32

Notes: BitLocker disabled, system updated from Windows 10, scrolling excellent on internal and external monitors,